Who Fears Artificial NON Intelligence?

Ever since a bunch of plastic and electronic components beat Go champions, the world has been shaken by a new hysterical1 crisis about « Artificial Intelligence ». A peak of delirium was reached when MEPs advocated the creation of a special legal personality for robots2. Why not a legal personality for bathroom carpets, while we’re at it? Why not? Some do consider them a serious, deadly danger… How did we get here?

Geluck – I say, Artificial Intelligence is good, unless it is to serve natural bullshitting

Initially, we have a term – intelligence – that is applied to machines by analogy with human beings; then, one thing leading to another, we are asked to believe that whatever machines do better than humans is intelligence! Isn’t that the world turned upside down?

What can machines do?

- Calculate very, very quickly

- Store very large amounts of information, that is to say representations of the world according to a certain formal modelling

- Find links between representations. Again, we must provide them with those representations.

New ways of modelling the world, such as document indexing, which retrieves a whole document from a few keywords, or graph databases, which bring out implicit connections in large amounts of data, have certainly allowed remarkable progress. Yet recent progress owes much more to the increase in processing speed and to the phenomenal growth in the volume of data that can be stored and computed, because it relies on statistics. If this is often called statistical learning, it is again by analogy. Human learning is based on experience, which involves the whole body, whereas machine learning only involves calculations on the representations it is given.

Three points radically differentiate human intelligence from so-called artificial « intelligence ».

Machines are incapable of modeling the world by themselves.

Winning a game of Go means being able to consider a very large number of possibilities and to choose the one best suited to countering the opponent’s play. It is only computing power applied to a problem that is ultimately relatively simple for a computer, because it is purely rational. A computer can win a game of Go because the rules of the game can be modeled. This is not at all to belittle the work of those who build automatic systems that perform more and more complex tasks. The difficulty lies in modeling the rules of the game so that they can be « learned » by a computer. AlphaGo and its fellow advanced systems are remarkable achievements, but put them in front of a high-school-level math problem and they will not even « know »3 what it is about, whereas any child has in her what it takes to grasp the problem and then solve it.

Machines have no sense of initiative.

No matter how good it is at playing Go, the « idea »3 will never occur to AlphaGo to learn Korean in order to better understand its opponents’ strategy, or to found a school to teach Go to children. For a machine’s field of action or competence to expand beyond what was originally intended, human intervention is necessary. Under these conditions, can we really speak of intelligence?

Words have meaning. Artificial « intelligence » and machine « learning » are terms based on an analogy with human beings. Even if machines calculate much faster than we do, human « intelligence » and the human ability to « learn » cannot be reduced to what these machines can do. Yet the metaphorical use of the terms « intelligence » and « learning » has given rise to an inflation of fantasies, fed by a kind of Geppetto complex and by fascination4.

Machines do not have an emotional and sensitive body.

The conception of intelligence as a pure product of the brain, reduced to rational calculation, is certainly widespread – probably in part because our elites are trained in schools where only this form of intelligence is developed and valued – but it is a very partial one.

Isn’t the ability to decide an ethical or moral issue also part of what is called human intelligence? The same goes for the ability to devise a solution in a situation we have never encountered. These are all situations in which the intelligence we call upon is anchored in the whole of our experience as sensitive human beings.

Having said this, only two choices are possible. Either you consider that human beings can be reduced to fully calculable biological mechanics; in that case, it is still reasonable to consider that, given all the human complexity that still eludes us, we are very, very far from being able to reproduce it artificially in all its specificity. Or you consider – and this is my choice – that human beings cannot be reduced to fully calculable mechanics, that something in human consciousness escapes calculation. Consequently, whatever technical advances we make, the artificial should always be considered essentially different from the human in particular, and from the living in general. It is a question of anthropological vision and ethical position…

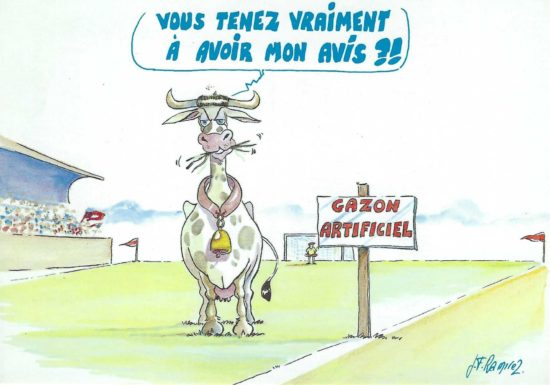

Artificial grass – Do you really want my opinion ?

Without a prior understanding of what is meant by intelligence, debates on « Artificial Intelligence » quickly drift into discussing the problems of overpopulation on Mars5… One can also ask what the point is of calling intelligence what is in fact statistical calculation. I can see no explanation other than the quest for hype by the industry players and researchers involved. It has the regrettable effect of confusing public debate on the subject. Most distressing is that such debates – relayed by those for whom waving the red rag of fear boosts the audience – obscure the real questions raised by new technologies. Rather than worrying about a very, very, very hypothetical disappearance of the human / machine distinction, with science-fiction scenarios of machines seizing power, we would be much better advised to observe carefully the very, very human choices and goals that guide the algorithms, and the very, very current collection of all sorts of data…

Updated 5th January 2018 and 7th May 2020

1 By « hysterical » I mean something that appears totally disproportionate to the reality of the situation. The previous crisis dates back to the 70s. It was followed by a kind of fatwa: in the late 90s and early 2000s, when I was doing research in automatic speech recognition, the term AI was completely banned from researchers’ vocabulary. Shame on anyone who uttered it, because it was associated with the megalomaniac ramblings of researchers who had lost themselves in promises inspired more by science fiction than by real possibilities…

2 Luckily, the advisers of the Republic keep their heads on their shoulders… Le CESE n’est pas favorable à la création d’une personnalité juridique pour les robots ou l’IA (« CESE does not support the creation of a legal personality for robots or AI »).

3 Another metaphor here, right? Computers do not know anything; they have no ideas. Let’s not get confused by figures of speech…

4 Plus some small commercial interests, of course: Google, the owner of DeepMind, the company that designed AlphaGo, is not the number one search engine in Asia. In China and Korea, the matches against AlphaGo were watched by tens of millions of people… Intelligence artificielle : les leçons d’une victoire (« Artificial Intelligence: Lessons of a Victory »)

5 « Fearing a rise of killer robots is like worrying about overpopulation on Mars », Andrew Ng, quoted by Dominique Cardon on 19 January 2017 during the public hearing on AI held by the Parliamentary Office for the Evaluation of Scientific and Technological Choices. Dominique Cardon’s short talk, which is very enlightening on the subject, is at 11:57:45.

This article was originally written in French; the French version contains references in French.

Véronique Gendner, e-tissage.net